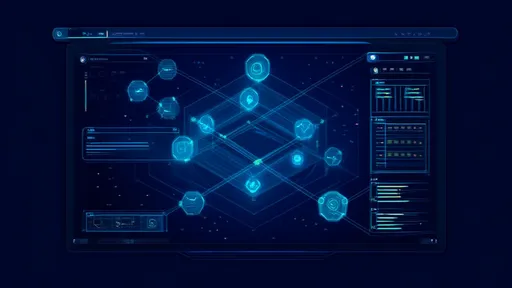

The evolution of data management has entered a new phase with the emergence of the Lakehouse architecture, a paradigm that seeks to unify the best aspects of data lakes and data warehouses. As organizations increasingly adopt this hybrid approach, the need to evaluate its maturity becomes paramount. A maturity assessment framework for Lakehouse architecture provides a structured way to gauge how well an organization is leveraging this model to drive value, ensure scalability, and maintain robustness in its data operations.

At its core, the Lakehouse architecture aims to address the longstanding trade-offs between flexibility and performance. Data lakes have traditionally excelled at storing vast amounts of raw, unstructured data at low cost, but they often fall short when it comes to supporting high-performance analytics and transactional consistency. On the other hand, data warehouses offer strong performance and governance for structured data but can be inflexible and expensive for diverse data types. The Lakehouse model integrates these capabilities, enabling organizations to store data in open formats while supporting ACID transactions, advanced analytics, and machine learning workloads.

Assessing the maturity of a Lakehouse implementation involves examining multiple dimensions, including data governance, storage efficiency, processing capabilities, and integration with existing systems. A mature Lakehouse architecture not only combines storage and compute effectively but also ensures that data is accessible, reliable, and secure. It empowers data teams to collaborate seamlessly, from data engineers and scientists to business analysts, fostering a culture of data-driven decision-making across the enterprise.
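One way to make such an assessment concrete is to score each dimension and summarize the result. The sketch below is only an illustration: the 1 to 5 scale, the level labels, the `assess` function, and the rule that the weakest dimension caps the overall level are all assumptions made for this example, not part of any published framework.

```python
# Dimension names follow the ones listed in the paragraph above.
DIMENSIONS = ("governance", "storage_efficiency", "processing", "integration")

# Assumed 1-5 scale with illustrative labels (not taken from any standard).
LEVEL_LABELS = {
    1: "initial",
    2: "developing",
    3: "defined",
    4: "managed",
    5: "optimizing",
}


def assess(scores: dict[str, int]) -> dict:
    """Summarize per-dimension scores (1-5) into an overall picture."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for name in DIMENSIONS:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"score for {name} must be between 1 and 5")

    average = sum(scores[d] for d in DIMENSIONS) / len(DIMENSIONS)
    weakest = min(DIMENSIONS, key=lambda d: scores[d])
    # Assumption: the overall level is capped by the weakest dimension,
    # so one neglected area cannot be hidden by strong scores elsewhere.
    return {
        "average": round(average, 2),
        "weakest": weakest,
        "overall_level": LEVEL_LABELS[scores[weakest]],
    }


example = assess(
    {"governance": 4, "storage_efficiency": 3, "processing": 5, "integration": 2}
)
print(example)
```

Capping the overall level at the weakest dimension is a deliberate design choice in this sketch; a weighted average would be an equally reasonable alternative if some dimensions matter more to a given organization.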

One critical aspect of maturity is data governance and quality. In a Lakehouse environment, governance must extend across both structured and unstructured data, enforcing policies for data lineage, metadata management, and compliance. Organizations with high maturity in this area implement automated data quality checks, role-based access controls, and comprehensive auditing mechanisms. They treat data as a strategic asset, ensuring that it is trustworthy and used responsibly in line with regulatory requirements such as GDPR or CCPA.
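As a rough illustration of what an automated data quality check might look like, the sketch below runs named rules over in-memory rows and counts failures per rule. The helper names (`not_null`, `in_range`, `run_checks`) and the sample columns are hypothetical; a real Lakehouse would typically run equivalent rules inside its processing engine rather than in plain Python.

```python
from typing import Callable, Iterable

Row = dict[str, object]
Check = Callable[[Row], bool]


def not_null(column: str) -> Check:
    """Rule: the column must be present and not None."""
    return lambda row: row.get(column) is not None


def in_range(column: str, low: float, high: float) -> Check:
    """Rule: the column must be numeric and within [low, high]."""
    def check(row: Row) -> bool:
        value = row.get(column)
        return isinstance(value, (int, float)) and low <= value <= high
    return check


def run_checks(rows: Iterable[Row], checks: dict[str, Check]) -> dict[str, int]:
    """Return the number of failing rows for each named check."""
    failures = {name: 0 for name in checks}
    for row in rows:
        for name, check in checks.items():
            if not check(row):
                failures[name] += 1
    return failures


# Hypothetical sample data for illustration only.
sample_rows = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2.0},
]
report = run_checks(
    sample_rows,
    {"id_not_null": not_null("id"), "amount_in_range": in_range("amount", 0, 1000)},
)
print(report)
```

A report like this could feed a pipeline gate, for example by failing a load when any count exceeds an agreed threshold.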

Another key dimension is storage and processing efficiency. A mature Lakehouse uses open columnar file formats such as Apache Parquet, together with table formats such as Delta Lake that add a transaction log on top of those files, to optimize storage and query performance. It employs techniques such as data partitioning, indexing, and caching to minimize latency and reduce costs. Moreover, it supports elastic scaling of compute resources, allowing organizations to handle varying workloads without over-provisioning infrastructure. This efficiency is crucial for supporting real-time analytics and machine learning pipelines at scale.
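To show why partitioning reduces the amount of data a query has to touch, here is a minimal sketch of partition pruning over a `key=value` directory layout. The `event_date` partition column, the table path, and the function names are assumptions chosen for illustration; query engines perform this step internally using table metadata.

```python
def partition_path(table_root: str, event_date: str) -> str:
    """Build a key=value style partition directory for one date."""
    return f"{table_root}/event_date={event_date}"


def prune(partitions: list[str], start: str, end: str) -> list[str]:
    """Keep only partitions whose event_date falls within [start, end].

    Assumes each path ends with the partition value and that dates are
    ISO formatted (YYYY-MM-DD), so string comparison matches date order.
    """
    selected = []
    for path in partitions:
        value = path.rsplit("event_date=", 1)[1]
        if start <= value <= end:
            selected.append(path)
    return selected


# Hypothetical table location and dates.
all_partitions = [
    partition_path("/lake/orders", day)
    for day in ("2025-08-24", "2025-08-25", "2025-08-26", "2025-08-27")
]
to_scan = prune(all_partitions, "2025-08-25", "2025-08-26")
print(to_scan)
```

In this example a query filtered to two days reads two of the four directories; the other partitions are skipped without opening any files.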

Integration capabilities also play a vital role in maturity assessment. A well-architected Lakehouse seamlessly connects with a variety of data sources and sinks, from cloud storage and streaming platforms to traditional databases and business intelligence tools. It provides unified APIs and connectors that simplify data ingestion and consumption, reducing the complexity of data pipelines. High maturity in integration ensures that the Lakehouse acts as a central hub for all data activities, eliminating silos and promoting interoperability across the ecosystem.

Furthermore, the maturity of a Lakehouse architecture is reflected in its support for advanced analytics and machine learning. Organizations at an advanced stage enable data scientists to build, train, and deploy models directly on the Lakehouse, leveraging integrated tools and frameworks. They facilitate collaborative workflows with version control, experiment tracking, and model governance, accelerating the time-to-insight and fostering innovation. This capability transforms the Lakehouse from a passive storage repository into an active engine for predictive and prescriptive analytics.

Scalability and performance are additional indicators of maturity. A mature Lakehouse architecture is designed to handle rapid, sustained data growth without compromising on speed or reliability. It employs distributed processing engines like Apache Spark or Presto to execute complex queries efficiently, even across petabytes of data. Performance tuning, monitoring, and optimization are ongoing practices, ensuring that the system meets the evolving needs of the business. This scalability is essential for supporting large-scale applications, from customer analytics to IoT data processing.

Security is another cornerstone of Lakehouse maturity. A robust security framework encompasses encryption at rest and in transit, network isolation, and threat detection. It includes fine-grained access controls that restrict data exposure based on user roles and contexts. Organizations with high maturity adopt a zero-trust approach, continuously monitoring for anomalies and vulnerabilities. They also ensure that security policies are consistently enforced across hybrid and multi-cloud deployments, safeguarding sensitive data against breaches and unauthorized access.
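Fine-grained, role-based access control can be pictured as a mapping from roles to the columns they may read, with everything else masked. The following sketch makes that idea concrete under stated assumptions: the role names, column names, and the `mask_row` helper are hypothetical, and production systems would enforce such policies in the catalog or query engine rather than in application code.

```python
# Hypothetical role-to-column policy for an "orders" table.
ROLE_COLUMNS = {
    "analyst": {"order_id", "amount", "country"},
    "data_engineer": {"order_id", "amount", "country", "email"},
}

MASK = "***"


def visible_columns(role: str, requested: list[str]) -> list[str]:
    """Return the requested columns this role is allowed to read."""
    allowed = ROLE_COLUMNS.get(role, set())  # unknown roles get nothing
    return [column for column in requested if column in allowed]


def mask_row(role: str, row: dict[str, object]) -> dict[str, object]:
    """Replace values of columns the role may not read with a mask."""
    allowed = ROLE_COLUMNS.get(role, set())
    return {
        column: (value if column in allowed else MASK)
        for column, value in row.items()
    }


row = {"order_id": 1, "amount": 42.0, "email": "a@example.com"}
print(mask_row("analyst", row))
print(mask_row("contractor", row))  # role not in the policy: all masked
```

Defaulting unknown roles to an empty column set follows the deny-by-default stance that a zero-trust approach implies.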

Operational excellence is the final piece of the puzzle. Mature Lakehouse implementations embrace DevOps and DataOps practices, automating deployment, testing, and maintenance processes. They use infrastructure-as-code tools to manage resources reproducibly and incorporate continuous integration and delivery pipelines for data applications. This operational rigor minimizes downtime, enhances agility, and allows teams to iterate quickly on data solutions. It also includes comprehensive logging, alerting, and disaster recovery plans to maintain high availability and resilience.

In conclusion, evaluating the maturity of a Lakehouse architecture is not a one-time exercise but an ongoing journey. It requires a holistic view of how data is managed, processed, and utilized to create business value. Organizations that invest in maturing their Lakehouse capabilities position themselves to harness the full potential of their data assets, driving innovation and competitive advantage. As the technology landscape continues to evolve, those with a mature Lakehouse foundation will be better equipped to adapt to new challenges and opportunities in the data-driven era.

By /Aug 26, 2025
