The financial industry's battle against fraud has entered a new technological frontier, moving decisively beyond traditional rule-based systems and siloed data analysis. In this high-stakes environment, graph databases have emerged not merely as another tool but as a foundational technology that reshapes how institutions understand and combat sophisticated fraud networks. Because a graph stores the relationships between entities (people, transactions, devices, locations) as first-class data, it can surface patterns that are hard to see in the rows and columns of a relational database, where every additional hop along a chain of connections requires another join.

Consider a typical credit card application fraud scenario. A traditional rule-based system might flag an application based on a single data point, such as an invalid phone number. Modern fraud rings, however, are complex and adaptive: they use valid but stolen identities, spreading subtle connections across many applications. A graph database excels here by mapping the entire ecosystem around an application. A short traversal can reveal that the same device ID was used to submit twenty other applications under different names, all listing the same apartment building as a home address, and all linked to a handful of IP addresses from a previously flagged network. This holistic view of connections is the critical advantage.
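A minimal sketch of this kind of shared-attribute lookup, written in plain Python rather than a graph query language. The record fields (`device`, `building`, `ip`), the sample values, and the functions `build_graph` and `linked_applications` are hypothetical and exist only for illustration. Each application is linked to one node per identifying attribute, so finding applications that share a device, building, or IP address becomes a two-hop traversal.

```python
from collections import defaultdict

# Hypothetical application records; field names and values are illustrative only.
applications = [
    {"id": "app-1", "name": "A. Jones", "device": "dev-9", "building": "12 Elm St", "ip": "203.0.113.5"},
    {"id": "app-2", "name": "B. Smith", "device": "dev-9", "building": "12 Elm St", "ip": "203.0.113.5"},
    {"id": "app-3", "name": "C. Lee", "device": "dev-9", "building": "12 Elm St", "ip": "203.0.113.7"},
    {"id": "app-4", "name": "D. Kim", "device": "dev-2", "building": "40 Oak Ave", "ip": "198.51.100.1"},
]

LINK_FIELDS = ("device", "building", "ip")


def build_graph(apps):
    """Connect each application node to one node per identifying attribute value."""
    graph = defaultdict(set)
    for app in apps:
        for field in LINK_FIELDS:
            attr_node = f"{field}:{app[field]}"
            graph[app["id"]].add(attr_node)
            graph[attr_node].add(app["id"])
    return graph


def linked_applications(graph, app_id):
    """Two-hop traversal: application -> shared attribute -> other applications.

    Returns {other_application_id: set of attribute nodes it shares with app_id}.
    """
    links = defaultdict(set)
    for attr_node in graph[app_id]:
        for other in graph[attr_node]:
            if other != app_id:
                links[other].add(attr_node)
    return links


graph = build_graph(applications)
for other, shared in sorted(linked_applications(graph, "app-1").items()):
    print(other, sorted(shared))
```

Here `app-1` is linked to `app-2` through three shared attributes and to `app-3` through two, while `app-4` never appears. In a graph database the same question would typically be expressed as a single pattern query rather than application code.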

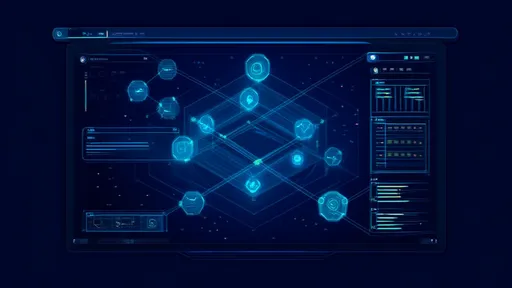

The power of this approach is amplified by building detailed knowledge graphs. A knowledge graph is a dynamic model of a domain, built on graph technology, that integrates data from disparate sources into a single interconnected web. For a financial institution, this means merging data from core banking systems, transaction feeds, public records, watchlists, and even news sources into one queryable graph, which gives a 360-degree view of each customer and the networks they operate within. The knowledge graph becomes a living representation of the financial ecosystem, in which the relationships are as valuable as the data points themselves.
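To make the integration idea concrete, here is a minimal sketch that loads facts from several sources as (subject, predicate, object) triples into one in-memory graph and retrieves a customer's two-hop context. The source names, identifiers, and the `KnowledgeGraph` class are hypothetical; a production knowledge graph would live in a graph database, not a Python dict. The example shows that the sanctions listing on the company becomes visible from a query about the customer only because the public-records fact and the watchlist fact share the node `company:Acme Ltd`.

```python
from collections import defaultdict

# Hypothetical facts: (source system, subject, predicate, object).
facts = [
    ("core_banking", "customer:42", "OWNS", "account:A1"),
    ("transactions", "account:A1", "SENT_TO", "account:B7"),
    ("public_records", "customer:42", "DIRECTOR_OF", "company:Acme Ltd"),
    ("watchlist", "company:Acme Ltd", "LISTED_ON", "list:sanctions"),
]


class KnowledgeGraph:
    def __init__(self):
        # node -> list of (predicate, other node, source, direction)
        self.edges = defaultdict(list)

    def add_fact(self, source, subj, pred, obj):
        # Index each fact from both ends so traversal can follow it either way.
        self.edges[subj].append((pred, obj, source, "out"))
        self.edges[obj].append((pred, subj, source, "in"))

    def context(self, start, depth=2):
        """Breadth-first search: every fact touched within `depth` hops of `start`."""
        seen = {start}
        frontier = [start]
        found = set()
        for _ in range(depth):
            next_frontier = []
            for node in frontier:
                for pred, other, source, direction in self.edges[node]:
                    if direction == "out":
                        found.add((node, pred, other, source))
                    else:
                        found.add((other, pred, node, source))
                    if other not in seen:
                        seen.add(other)
                        next_frontier.append(other)
            frontier = next_frontier
        return found


kg = KnowledgeGraph()
for fact in facts:
    kg.add_fact(*fact)

for subj, pred, obj, source in sorted(kg.context("customer:42")):
    print(f"{subj} -[{pred}]-> {obj}  (from {source})")
```

With `depth=1` only the two facts directly about the customer come back; at `depth=2` the transaction and the watchlist hit from the other two sources appear as well.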

In practice, implementing a graph-powered anti-fraud system is a multi-layered process. The first step is data ingestion and harmonization: raw data from various silos is cleaned, normalized, and loaded into the graph model. Entities such as users, accounts, credit cards, and merchants become nodes, while the interactions between them (a payment, a login attempt, a shared attribute) become the edges that connect them. The model is updated continuously as new events arrive, so the knowledge graph reflects the most current state of activity.
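The harmonization step can be sketched as follows, assuming simplified normalization rules (lowercased emails, digits-only phone numbers) and a toy in-memory property graph. The `PropertyGraph` class, the `ingest` function, and the event fields are hypothetical. Because identifiers are normalized before they become node keys, two users who entered the same email and phone number in different formats end up attached to the same attribute nodes, and each event is applied incrementally rather than by reloading the graph. In a graph database the upsert would typically be a MERGE-style operation.

```python
import re
from collections import defaultdict


def normalize(kind, value):
    """Canonicalize a raw identifier so one real-world value maps to one node.

    These rules are simplified assumptions, not a complete standard.
    """
    value = value.strip()
    if kind == "email":
        return value.lower()
    if kind == "phone":
        return re.sub(r"\D", "", value)  # keep digits only
    return value


class PropertyGraph:
    def __init__(self):
        self.nodes = {}                # (kind, normalized id) -> properties
        self.edges = defaultdict(set)  # node key -> {(relationship, node key)}

    def upsert_node(self, kind, raw_id, **props):
        # Create the node if it is new, otherwise merge in the new properties.
        key = (kind, normalize(kind, raw_id))
        self.nodes.setdefault(key, {}).update(props)
        return key

    def add_edge(self, src, rel, dst):
        self.edges[src].add((rel, dst))  # a set, so replaying an event adds no duplicates


def ingest(graph, event):
    """Apply one incoming event to the graph incrementally."""
    user = graph.upsert_node("user", event["user_id"], last_seen=event["ts"])
    for kind in ("email", "phone"):
        if kind in event:
            attr = graph.upsert_node(kind, event[kind])
            graph.add_edge(user, "HAS_" + kind.upper(), attr)


# Hypothetical events from two different source systems.
events = [
    {"user_id": "u1", "ts": "2025-08-01T10:00", "email": "Jane.Doe@Example.com ", "phone": "+1 (555) 010-0000"},
    {"user_id": "u2", "ts": "2025-08-01T10:05", "email": "jane.doe@example.com", "phone": "1-555-010-0000"},
]

graph = PropertyGraph()
for event in events:
    ingest(graph, event)

print(len(graph.nodes))  # 4: two users sharing one email node and one phone node
```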

With the graph established, fraud detection moves from a reactive to a proactive and predictive stance. Graph algorithms such as community detection are run across the graph to find tightly knit clusters of entities whose behavior suggests a coordinated fraud ring. Link analysis can uncover hidden paths between a known money mule account and a seemingly legitimate new customer, revealing the entire chain of illicit activity. The system can also compute a relationship-strength score or the shortest path between an entity and a known fraudulent element, and turn these measures into dynamic risk scores that are updated with each new interaction.
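One simple way to turn "distance to a known fraudulent element" into a score is a multi-source breadth-first search from every flagged node, followed by a decay per hop. This is a sketch under stated assumptions: the graph is undirected and unweighted, the node names are hypothetical, and the rule of halving risk with each hop (`decay=0.5`) is an illustrative choice, not a standard. Because the score is recomputed from the current graph, adding an edge changes it, which is the sense in which such scores evolve with each interaction.

```python
from collections import deque


def hop_distances(adjacency, sources):
    """Multi-source BFS: number of hops from each reachable node to its nearest source."""
    dist = {s: 0 for s in sources}
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, ()):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def risk_scores(adjacency, known_fraud, decay=0.5):
    """Illustrative rule: risk = decay ** hops to nearest known-fraud node, 0.0 if unreachable."""
    dist = hop_distances(adjacency, known_fraud)
    return {node: (decay ** dist[node] if node in dist else 0.0) for node in adjacency}


# Hypothetical undirected graph, stored as adjacency lists in both directions.
adjacency = {
    "mule": ["acct_x"],
    "acct_x": ["mule", "new_customer", "device_d"],
    "new_customer": ["acct_x"],
    "device_d": ["acct_x"],
    "isolated": ["other"],
    "other": ["isolated"],
}
print(risk_scores(adjacency, ["mule"]))
# {'mule': 1.0, 'acct_x': 0.5, 'new_customer': 0.25, 'device_d': 0.25, 'isolated': 0.0, 'other': 0.0}

# A new interaction links "other" to "device_d"; recomputing raises its risk.
adjacency["other"].append("device_d")
adjacency["device_d"].append("other")
print(risk_scores(adjacency, ["mule"])["other"])  # 0.125
```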

The real-world efficacy of this technology is no longer theoretical. Major global banks have publicly detailed their success stories. One European bank implemented a graph-based solution to tackle first-party fraud, in this case customers falsely claiming that they did not authorize a transaction. By analyzing the graph of transactions, device fingerprints, and behavioral biometrics, the bank could see that a "disputed" transaction originated from a device the account holder had used consistently for months, and that the device was connected to the user's home Wi-Fi network at the time of the transaction. This clear, visual evidence drastically reduced false claims and saved millions annually.
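The check described in this case can be sketched as a lookup over two kinds of relationships: account-to-device (with first- and last-use dates) and transaction-to-network. Everything here is hypothetical and simplified for illustration: the identifiers, the dict-based storage, the `dispute_evidence` function, and the 90-day threshold for treating a device as established. It is not the bank's actual system.

```python
from datetime import date

# Hypothetical graph fragments, stored as dicts for brevity.
# Account-to-device edges carry the first and last date the device was used.
device_history = {
    ("acct-7", "dev-A"): {"first_seen": date(2025, 1, 3), "last_seen": date(2025, 6, 1)},
}
# Each account's known home network.
home_network = {"acct-7": "wifi-home-7"}
# Transaction nodes with the device and network they were made from.
transactions = {
    "tx-100": {"account": "acct-7", "device": "dev-A", "network": "wifi-home-7", "date": date(2025, 5, 20)},
    "tx-101": {"account": "acct-7", "device": "dev-Z", "network": "cell-123", "date": date(2025, 5, 21)},
}


def dispute_evidence(tx_id, min_days=90):
    """Summarize whether a disputed transaction came from an established device and network."""
    tx = transactions[tx_id]
    history = device_history.get((tx["account"], tx["device"]))
    tenure_days = (tx["date"] - history["first_seen"]).days if history else 0
    return {
        "known_device": tenure_days >= min_days,
        "device_tenure_days": tenure_days,
        "on_home_network": tx["network"] == home_network.get(tx["account"]),
    }


print(dispute_evidence("tx-100"))
print(dispute_evidence("tx-101"))
```

A dispute on `tx-100` comes back as a 137-day-old device on the home network, the kind of evidence that undercuts a claim of unauthorized use; `tx-101`, from an unknown device on a different network, does not.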

Another compelling case involves a large e-commerce platform fighting organized refund fraud rings. These groups made purchases with stolen credit cards, received the goods, and then requested refunds to a different payment method. By building a knowledge graph that connected purchase history, shipping addresses, customer service contact details, and refund accounts, the platform identified complex networks in which hundreds of seemingly unrelated accounts were funneling refunds to the same handful of digital wallets. The graph made the ring's coordinated activity unmistakable and led to the ring being dismantled.
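A minimal sketch of how such a ring can be surfaced: treat any shared shipping address, contact detail, or refund wallet as a link between accounts, then find connected groups with a union-find (disjoint-set) structure. The field names, sample records, and the minimum ring size of 3 are hypothetical. Connected components are a simpler stand-in for the community detection a production system might use, since here a single shared attribute is enough to join two groups.

```python
from collections import defaultdict

LINK_FIELDS = ("ship_to", "contact", "refund_wallet")


def find(parent, x):
    """Union-find lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def account_clusters(records):
    """Group accounts connected through any shared attribute value."""
    parent = {r["account"]: r["account"] for r in records}
    first_owner = {}  # (field, value) -> first account seen with that value
    for r in records:
        for field in LINK_FIELDS:
            key = (field, r[field])
            if key in first_owner:
                root_a = find(parent, r["account"])
                root_b = find(parent, first_owner[key])
                if root_a != root_b:
                    parent[root_a] = root_b  # merge the two groups
            else:
                first_owner[key] = r["account"]
    clusters = defaultdict(set)
    for account in parent:
        clusters[find(parent, account)].add(account)
    return list(clusters.values())


def suspicious_rings(records, min_size=3):
    """Return (accounts, refund wallets) for every cluster with at least `min_size` accounts."""
    wallets = defaultdict(set)
    for r in records:
        wallets[r["account"]].add(r["refund_wallet"])
    rings = []
    for cluster in account_clusters(records):
        if len(cluster) >= min_size:
            cluster_wallets = set().union(*(wallets[a] for a in cluster))
            rings.append((sorted(cluster), sorted(cluster_wallets)))
    return rings


# Hypothetical refund records: acct-1 and acct-2 share a wallet,
# acct-2 and acct-3 share a contact number; acct-4 shares nothing.
records = [
    {"account": "acct-1", "ship_to": "addr-1", "contact": "555-0101", "refund_wallet": "wallet-X"},
    {"account": "acct-2", "ship_to": "addr-2", "contact": "555-0102", "refund_wallet": "wallet-X"},
    {"account": "acct-3", "ship_to": "addr-3", "contact": "555-0102", "refund_wallet": "wallet-Y"},
    {"account": "acct-4", "ship_to": "addr-4", "contact": "555-0104", "refund_wallet": "wallet-Z"},
]
print(suspicious_rings(records))
```

No single record links `acct-1` to `acct-3`; they land in the same group only because both connect to `acct-2`, which is exactly the indirect structure that row-by-row review tends to miss.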

Beyond specific fraud types, graph databases are transforming anti-money laundering (AML) compliance. Traditional AML systems are notorious for generating large volumes of false-positive alerts, each requiring manual investigation. Graph analytics change this process. When an investigator receives an alert on a potentially suspicious transaction, they no longer have to examine it in isolation; they can explore the graph to see its full context: the sender's network, the recipient's connections, the flow of funds across multiple hops, and any links to politically exposed persons (PEPs) or sanctioned entities. This context supports faster, more accurate decisions, reducing operational costs and increasing the catch rate for genuine money laundering schemes.
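The multi-hop exploration can be sketched as a bounded depth-first search over directed transfer edges that returns every path from the alerted account to a flagged entity. The account names, the flagged set, and the `paths_to_flagged` function with its `max_hops` limit are hypothetical. The hop limit matters: the number of simple paths can grow exponentially with depth, so the search has to be bounded.

```python
def paths_to_flagged(transfers, start, flagged, max_hops=3):
    """Enumerate simple directed paths of at most `max_hops` transfers from `start`
    that end at a flagged entity (e.g. sanctioned or PEP-linked)."""
    results = []

    def walk(node, path):
        if len(path) - 1 >= max_hops:  # a path with k nodes has k - 1 transfers
            return
        for nxt in transfers.get(node, ()):
            if nxt in path:  # skip cycles so each path is simple
                continue
            new_path = path + [nxt]
            if nxt in flagged:
                results.append(new_path)
            walk(nxt, new_path)

    walk(start, [start])
    return results


# Hypothetical directed transfer graph: sender -> list of recipients.
transfers = {
    "acct-S": ["acct-1", "acct-2"],
    "acct-1": ["acct-3"],
    "acct-2": ["acct-S"],  # funds cycling back to the sender
    "acct-3": ["shell-co"],
    "shell-co": ["acct-9"],
}
flagged = {"shell-co"}

print(paths_to_flagged(transfers, "acct-S", flagged, max_hops=3))
# [['acct-S', 'acct-1', 'acct-3', 'shell-co']]
print(paths_to_flagged(transfers, "acct-S", flagged, max_hops=2))
# []
```

The flagged entity sits three transfers away from the alerted account, so a one-hop review of the alert would not reveal it, while a bounded traversal does.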

The future trajectory of graph technology in fraud prevention points toward even deeper integration with artificial intelligence and machine learning. Graph neural networks (GNNs), a class of deep learning models designed specifically for graph-structured data, learn a representation of each node from its own attributes and the structure of its neighborhood, so they can capture subtle, latent patterns of fraudulent behavior directly from the knowledge graph. Because they learn from examples rather than hand-written rules, they can help flag new variants of fraud schemes and can be retrained as adversaries change tactics. The graph is shifting from a system of record to a system of intelligence.
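The core operation inside most GNNs is message passing: each node's new representation is computed from its own features and those of its neighbors. Below is a minimal forward pass of one such layer in plain Python, using mean aggregation over a node and its neighbors followed by a linear map and ReLU, a simplified relative of the graph convolutional network (GCN) rule. The node features, the graph, and the weight matrix are hypothetical and fixed by hand; in a real model the weights are learned from labeled fraud cases, and that training step is omitted here.

```python
def relu(x):
    return max(0.0, x)


def mean_aggregate_layer(adjacency, features, weights):
    """One message-passing layer: h_v' = ReLU(W @ mean(h_u for u in {v} and N(v)))."""
    out = {}
    for v, neighbors in adjacency.items():
        group = [v] + list(neighbors)  # include a self-loop
        dim = len(features[v])
        mean = [sum(features[u][i] for u in group) / len(group) for i in range(dim)]
        out[v] = [relu(sum(w * m for w, m in zip(row, mean))) for row in weights]
    return out


# Hypothetical 2-dimensional node features and a hand-picked 2x2 weight matrix.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"], "d": []}
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0], "d": [0.0, 0.0]}
weights = [[1.0, -1.0], [0.5, 0.5]]

layer1 = mean_aggregate_layer(adjacency, features, weights)
layer2 = mean_aggregate_layer(adjacency, layer1, weights)  # now depends on 2-hop neighbors
print(layer1["a"], layer2["a"])
```

Stacking layers is what lets structure matter: after two layers, node `a`'s representation depends on `c`, which it is not directly connected to, so a model can react to patterns two hops away.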

In conclusion, the adoption of graph databases and knowledge graphs represents a paradigm shift in the fight against financial crime: a move from analyzing isolated data points to understanding complex, interconnected networks. This technology provides the depth of context, the speed of real-time analysis, and the scalability needed to stay ahead of increasingly sophisticated fraudsters. As the digital economy grows and financial transactions become more complex, the ability to see and understand these connections will be not just a competitive advantage but a fundamental necessity for security and trust.

By /Aug 26, 2025
